Invited Speakers

- Dhruv Batra, GaTech/FAIR

- Joyce Chai, Michigan State University

- Cynthia Matuszek, UMBC

- Raymond J. Mooney, UT Austin

- Martha Palmer, CU Boulder

- Matthias Scheutz, Tufts

- Stefanie Tellex, Brown

Schedule

| 8:30-8:40 | Opening remarks | Workshop Chairs |

| 8:40-9:00 | Poster Spotlight (1 min madness) | |

| 9:00-9:45 | Invited Talk: Collaboration in Situated Language Communication | Joyce Chai |

| 9:45-10:30 | Invited Talk: Talking to the BORG: Task-based Natural Language Dialogues with Multiple Mind-Sharing Robots | Matthias Scheutz |

| 10:30-11:00 | Coffee Break | |

| 11:00-11:45 | Invited Talk: The Blocks World Redux | Martha Palmer |

| 11:45-12:30 | Invited Talk: Learning Models of Language, Action and Perception for Human-Robot Collaboration | Stefanie Tellex |

| 12:30-2:00 | Lunch | |

| 2:00-2:45 | Invited Talk: Habitat: A Platform for Embodied AI Research | Dhruv Batra |

| 2:45-3:30 | Invited Talk: Learning Grounded Language Through Non-expert Interaction | Cynthia Matuszek |

| 3:30-4:00 | Coffee Break | |

| 4:00-4:45 | Invited Talk: A Review of Work on Natural Language Navigation Instructions | Raymond Mooney |

| 4:45-5:15 | Best Paper Oral Presentations: "SpatialNet: A Declarative Resource for Spatial Relations" (Morgan Ulinski, Bob Coyne and Julia Hirschberg); "Multi-modal Discriminative Model for Vision-and-Language Navigation" (Haoshuo Huang, Vihan Jain, Harsh Mehta, Jason Baldridge and Eugene Ie) | |

| 5:15-6:00 | Poster Session | |

Proceedings

- SpatialNet: A Declarative Resource for Spatial Relations (Best Paper)

  Morgan Ulinski, Bob Coyne and Julia Hirschberg

- Multi-modal Discriminative Model for Vision-and-Language Navigation (Best Paper)

  Haoshuo Huang, Vihan Jain, Harsh Mehta, Jason Baldridge and Eugene Ie

- Corpus of Multimodal Interaction for collaborative planning

  Miltiadis Marios Katsakioris, Helen Hastie, Ioannis Konstas and Atanas Laskov

- ¿Es un plátano? Exploring the Application of a Physically Grounded Language Acquisition System to Spanish

  Caroline Kery, Cynthia Matuszek and Francis Ferraro

- Learning from Implicit Information in Natural Language Instructions for Robotic Manipulations

  Ozan Arkan Can, Pedro Zuidberg Dos Martires, Andreas Persson, Julian Gaal, Amy Loutfi, Luc De Raedt, Deniz Yuret and Alessandro Saffiotti

- Semantic Spatial Representation: a unique representation of an environment based on an ontology for robotic applications

  Guillaume Sarthou, Aurélie Clodic and Rachid Alami

- From Virtual to Real: A Framework for Verbal Interaction with Robots

  Eugene Joseph

- What a neural language model tells us about spatial relations

  Mehdi Ghanimifard and Simon Dobnik

Cross Submissions

- Deep Learning for Category-Free Grounded Language Acquisition

  Nisha Pillai, Francis Ferraro and Cynthia Matuszek

- Improving Natural Language Interaction with Robots Using Advice

  Nikhil Mehta and Dan Goldwasser

- Improving Robot Success Detection using Static Object Data

  Rosario Scalise, Jesse Thomason, Yonatan Bisk and Siddhartha Srinivasa

- Learning to Generate Unambiguous Spatial Referring Expressions for Real-World Environments

  Fethiye Irmak Dogan, Sinan Kalkan and Iolanda Leite

- Learning to Ground Language to Temporal Logical Form

  Roma Patel, Stefanie Tellex and Ellie Pavlick

- Learning to Navigate Unseen Environments: Back Translation with Environmental Dropout

  Hao Tan, Licheng Yu and Mohit Bansal

- Learning to Parse Grounded Language using Reservoir Computing

  Xavier Hinaut and Michael Spranger

- Reinforced Cross-Modal Matching and Self-Supervised Imitation Learning for Vision-Language Navigation

  Xin Wang, Qiuyuan Huang, Asli Celikyilmaz, Jianfeng Gao, Dinghan Shen, Yuan-Fang Wang, William Yang Wang and Lei Zhang

- A Research Platform for Multi-Robot Dialogue with Humans

  Matthew Marge, Stephen Nogar, Cory Hayes, Stephanie M. Lukin, Jesse Bloecker, Eric Holder and Clare Voss

- From Spatial Relations to Spatial Configurations

  Soham Dan, Parisa Kordjamshidi, Julia Bonn, Archna Bhatia, Martha Palmer and Dan Roth

- Tactical Rewind: Self-Correction via Backtracking in Vision-and-Language Navigation

  Liyiming Ke, Xiujun Li, Yonatan Bisk, Ari Holtzman, Zhe Gan, Jingjing Liu, Jianfeng Gao, Yejin Choi and Siddhartha Srinivasa

- UniVSE: Robust Visual Semantic Embeddings via Structured Semantic Representations

  Hao Wu, Jiayuan Mao, Yufeng Zhang, Yuning Jiang, Lei Li, Weiwei Sun and Wei-Ying Ma

Overview and Call For Papers

SpLU-RoboNLP 2019 is a combined workshop on spatial language understanding (SpLU) and grounded communication for robotics (RoboNLP). It focuses on spatial language, covering both its linguistic and theoretical aspects and its application to various areas, especially robotics. The combined workshop aims to bring together members of the NLP, robotics, vision and related communities to initiate discussions across fields dealing with spatial language along with other modalities. The desired outcome is the identification of shared and unique challenges, problems and future directions across these fields and application domains.

While language can encode highly complex, relational structures of objects, spatial relations between them, and patterns of motion through space, the community has only scratched the surface on how to encode and reason about spatial semantics. Yet spatial language is crucial to robotics, navigation, NLU, translation and more. Standardizing tasks is challenging because we lack formal, domain-independent meaning representations. Spatial semantics requires an interplay between language, perception and (often) interaction.

Following the exciting recent progress in visual language grounding, the embodied, task-oriented aspect of language grounding is an important and timely research direction. To realize the long-term goal of robots that we can converse with in our homes, offices, hospitals, and warehouses, it is essential that we develop new techniques for linking language to action in the real world, where spatial language understanding plays a major role. Can we give instructions to robotic agents to assist with navigation and manipulation tasks in remote settings? Can we talk to robots about the surrounding visual world, and help them interactively learn the language needed to finish a task? We hope to learn about (and begin to answer) these questions as we delve deeper into spatial language understanding and grounding language for robotics.

The major topics covered in the workshop include:

- Spatial Language Meaning Representation (Continuous, Symbolic)

- Spatial Language Learning and Reasoning

- Multi-modal Spatial Understanding

- Instruction Following (real or simulated)

- Grounded or Embodied tasks

- Datasets and evaluation metrics

- Spatial meaning representations, continuous representations, ontologies, annotation schemes, linguistic corpora.

- Spatial information extraction from natural language.

- Spatial information extraction in robotics, multi-modal environments, navigational instructions.

- Text mining for spatial information in GIS systems, geographical knowledge graphs.

- Spatial question answering, spatial information for visual question answering

- Quantitative and qualitative reasoning with spatial information

- Spatial reasoning based on natural language or multi-modal information (vision and language)

- Extraction of spatial common sense knowledge

- Visualization of spatial language in 2-D and 3-D

- Spatial natural language generation

- Spatial language grounding, including the following:

- Aligning and Translating Language to Situated Actions

- Simulated and Real World Situations

- Instructions for Navigation

- Instructions for Articulation

- Instructions for Manipulation

- Skill Learning via Interactive Dialogue

- Language Learning via Grounded Dialogue

- Language Generation for Embodied Tasks

- Grounded Knowledge Representations

- Mapping Language and World

- Grounded Reinforcement Learning

- Language-based Game Playing for Grounding

- Structured and Deep Learning Models for Embodied Language

- New Datasets for Embodied Language

- Better Evaluation Metrics for Embodied Language

Camera-ready details

Archival track camera-ready papers should be prepared with NAACL style, either 9 pages without references (long papers) or up to 5 pages without references (short papers). Please make submissions via Softconf here by April 8.

Non-archival track camera-ready papers should be uploaded online (e.g., to arXiv), and links to those camera-ready copies should be sent to the organizing committee at splu-robonlp-2019@googlegroups.com.

Submission details

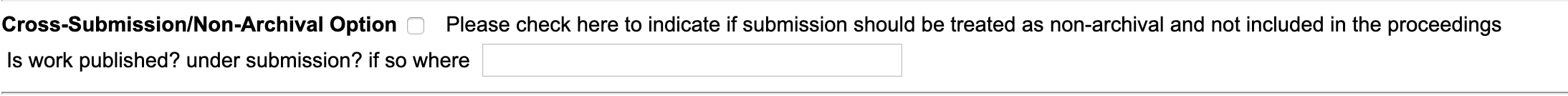

We encourage contributions in the form of technical papers (NAACL style, 8 pages without references) or shorter papers on position statements describing previously unpublished work or demos (NAACL style, 4 pages maximum). NAACL style files are available here. Please make submissions via Softconf here.

Non-Archival option: NAACL workshops are traditionally archival. To allow dual submission of work to SpLU-RoboNLP and other conferences/journals, we are also including a non-archival track. Space permitting, these submissions will still be presented at the workshop and hosted on the workshop website, but will not be included in the official proceedings. Please submit through Softconf but indicate that this is a cross submission at the bottom of the form:

Best Papers

We will present multiple best paper awards.

ACL Anti-Harassment Policy

Important Dates

- Submission Deadline: March 11, 2019 (11:59pm Anywhere on Earth time, UTC-12)

- Notification: March 28, 2019

- Camera Ready deadline: April 8, 2019

- Workshop Day: June 6, 2019

Organizers and PC

Organizers

| University of Rochester, IHMC | jallen@ihmc.us | |

| Semantic Machines/MIT | jda@cs.berkeley.edu | |

| | jasonbaldridge@google.com | |

| UNC Chapel Hill | mbansal@cs.unc.edu | |

| IHMC | abhatia@ihmc.us | |

| University of Washington | ybisk@yonatanbisk.com | |

| Microsoft Research | asli.ca@live.com | |

| IHMC | bdorr@ihmc.us | |

| Tulane University, IHMC | pkordjam@tulane.edu | |

| Army Research Lab | matthew.r.marge.civ@mail.mil | |

| University of Washington | thomason.jesse@gmail.com | |

Program Committee

| Rutgers University | |

| Cornell University | |

| University of Rochester | |

| Universität Bremen | |

| Örebro University | |

| Tel-Aviv University | |

| University of Trento | |

| University of Arizona | |

| University of Groningen | |

| CMU | |

| KU Leuven | |

| Michigan State University | |

| Stanford University | |

| CLASP and FLOV, University of Gothenburg Sweden | |

| University of Zurich | |

| Universität Bremen | |

| UCSF | |

| IHMC | |

| Tufts | |

| University of Washington | |

| Boise State University | |

| Semantic Machines | |

| Army Research Laboratory | |

| Cornell | |

| Cornell University | |

| KU Leuven | |

| University of Texas | |

| Microsoft | |

| Tilburg University, The Netherlands | |

| UT Austin | |

| University of Illinois Chicago | |

| IHMC | |

| Brandeis University | |

| University of Michigan | |

| Stanford | |

| The University of Texas | |

| UC Berkeley | |

| FAIR | |

| Princeton University | |

| Tufts | |

| Massey University of New Zealand | |

| Cornell | |

| ARL | |

| University of California Santa Barbara | |

| SUNY Binghamton | |

| University of Washington | |

Past Workshops

Sponsors